Over the last two weeks, our team has been reading article after article trying to digest all the latest Google news. Between AI Overviews, Google Marketing Live, and the Google algorithm document leak, we’ve had our hands full.

We’ve covered Google Marketing Live and AI Overviews, so now it’s time to turn our attention to the Google algorithm document leak. Don’t worry, we’re not going to deep dive into the more than 2,500 pages discovered online, but we are going to share the seven most fascinating tidbits we ran across and how they could impact your SEO and overall marketing efforts.

The Google algorithm document leak: an overview

But first, a little backstory on what happened.

Documentation from Google Search’s Content Warehouse API was published by an automated bot on GitHub. The documentation runs to more than 2,500 pages and describes over 14,000 attributes that Google measures, or can measure, as part of its algorithm.

Rand Fishkin, co-founder and CEO of SparkToro, was approached by a then-anonymous source (he has since come forward) about the content and authenticity of these documents. He shared findings from that conversation as well as his analysis here.

After countless publications covered the documentation leak with nary a word from Google, Google verified the documents are real on May 29.

This leak provides never-before-seen access to the inner workings of Google’s algorithm. The main takeaway: Google hasn’t been entirely truthful about what’s in the algorithm over the last few years.

One important thing to note: While the leak included documentation about metrics that Google either is collecting or has collected in the past, it did not reveal the weight of those metrics or whether or not they’re currently being used as ranking factors. So while we know that Google has the ability to measure metrics like domain authority and clicks (which we’ll get into later), we don’t know exactly how important those metrics are to rankings from this source alone. And, of course, Google makes changes to its algorithms all the time.

While we’re not going to stake our whole strategy on the findings, they do provide some very interesting insights into how Google works.

7 things Google can measure that might affect your SEO

According to the leaked documents, Google is measuring some surprising metrics. We’re sharing seven that stood out to us and what they mean for your SEO strategy.

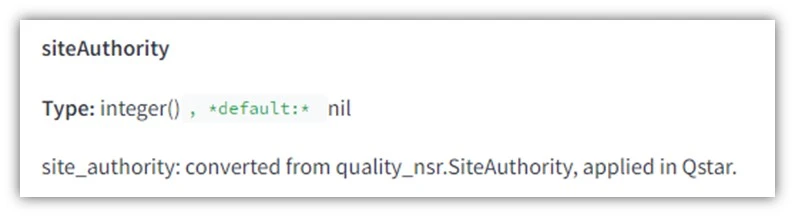

1. Domain authority

Previously, Google representatives have said that Google does not measure or consider domain authority at all. They claimed that they don’t take the overall quality or authoritativeness of your site into account when ranking individual pages. However, the leaked documentation painted another picture.

“In reality, as part of the Compressed Quality Signals that are stored on a per document basis, Google has a feature they compute called ‘siteAuthority,’” writes Mike King of iPullRank.

What this means: Your domain authority is being measured by Google. Again, we don’t know exactly if or how important it is to ranking, but Google is looking at it in some way. This means you need to have a well-built website with high-quality, authoritative content, plus credible links to your site that prove your content is valuable. Find more ways to increase domain authority here.

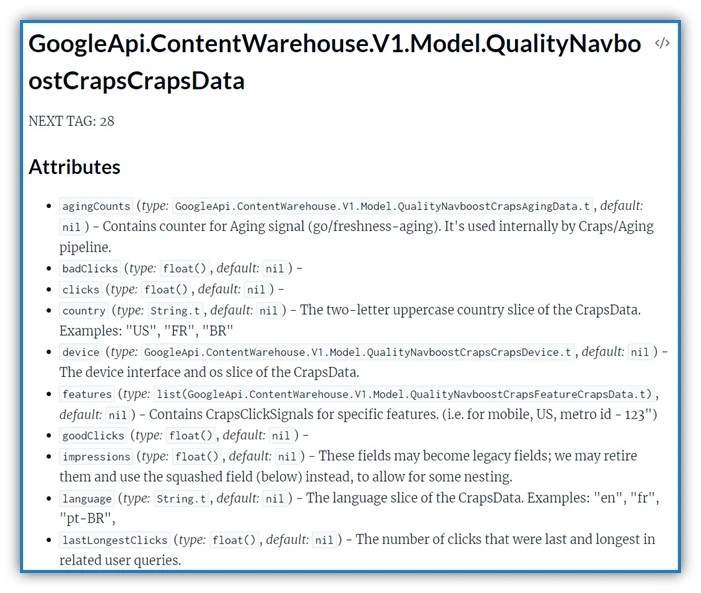

2. Clicks and engagement

It’s not just the number of clicks you get from your organic rankings that matters, but also the quality of those clicks. Google has previously said (over and over, in fact) that clicks weren’t a ranking factor, although many SEO experts have long debated whether or not that was true. Recently, information came out during the Google antitrust trial revealing that Google’s Navboost system, based on click quantity and quality, is “one of the important signals” in ranking sites.

So this inclusion isn’t necessarily surprising at this point. But it is interesting to see that Google measures clicks in a variety of ways, including badClicks, goodClicks, lastLongestClicks, and unsquashedClicks, according to the documents.

What this means: Focus on driving more successful clicks by broadening the number of high-intent queries you target, and make sure the content on those pages is truly helpful to users. Useful content and strong UX are the best ways to send healthy engagement signals to Google.

“A focus on driving more qualified traffic to a better user experience will send signals to Google that your page deserves to rank,” writes Mike.
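To watch click quality on your own site, you could tally "good" and "bad" clicks from your analytics data. The sketch below is only an illustration: the `Click` record, the 10-second threshold, and the good/bad definitions are our own assumptions, not how Google computes goodClicks, badClicks, or lastLongestClicks.

```python
from dataclasses import dataclass

# Assumption: a visit shorter than this counts as a quick bounce ("bad" click).
SHORT_VISIT_SECONDS = 10


@dataclass
class Click:
    """Hypothetical record of one organic click, exported from your analytics."""
    query: str
    dwell_seconds: float


def click_quality(clicks):
    """Return per-query counts of good and bad clicks plus the longest dwell time."""
    summary = {}
    for click in clicks:
        stats = summary.setdefault(
            click.query, {"good": 0, "bad": 0, "longest_dwell": 0.0}
        )
        if click.dwell_seconds < SHORT_VISIT_SECONDS:
            stats["bad"] += 1
        else:
            stats["good"] += 1
        stats["longest_dwell"] = max(stats["longest_dwell"], click.dwell_seconds)
    return summary


sample = [
    Click("crm pricing", 3),
    Click("crm pricing", 95),
    Click("what is crm", 40),
]
report = click_quality(sample)
```

Queries with many bad clicks and few good ones are candidates for a content or UX refresh.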

🔍 Find high-intent keywords your content strategy can target with our Free Keyword Tool.

3. Content freshness

Content recency and freshness are also measured as part of Google’s algorithm. There are many references to content publication and update dates within the Google API documents. In fact, Google reviews dates in the byline (bylineDate), URL (syntacticDate), and on-page content (semanticDate).

What this means: The more recent and relevant your content, the better. To keep your content fresh, it’s necessary to check in regularly on the topics covered on your website to see if there’s any outdated information.

For example, you could use a marketing planning calendar to take note of any significant dates that may require your content to be updated and plan new content to keep your site fresh. Let’s say your industry is real estate. In that scenario, you’d want to update your site to reflect the latest interest rates.
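Since dates can show up in the byline, the URL, and the content itself, it may be worth checking that yours agree. Here is a rough sketch of such a check. The URL pattern (`/YYYY/MM/DD/`), the function names, and the one-day tolerance are assumptions for illustration, and the leak does not say how Google reconciles these dates.

```python
import re
from datetime import date

# Assumption: dated URLs look like /2024/06/03/ somewhere in the path.
URL_DATE = re.compile(r"/(\d{4})/(\d{1,2})/(\d{1,2})(?:/|$)")


def date_from_url(url):
    """Return the date embedded in the URL path, or None if there isn't one."""
    match = URL_DATE.search(url)
    if not match:
        return None
    year, month, day = map(int, match.groups())
    try:
        return date(year, month, day)
    except ValueError:  # e.g. /2024/13/40/ is not a real date
        return None


def conflicting_dates(url, byline_date, content_date, tolerance_days=1):
    """True when the known dates for a page disagree by more than the tolerance."""
    candidates = [date_from_url(url), byline_date, content_date]
    known = [d for d in candidates if d is not None]
    if len(known) < 2:
        return False  # nothing to compare
    return (max(known) - min(known)).days > tolerance_days


url = "https://example.com/blog/2024/06/03/google-leak"
mismatch = conflicting_dates(url, date(2024, 6, 3), date(2022, 1, 15))
```

A page that gets flagged here has at least one date that is out of step with the others, which is a good prompt to review it.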

4. Change history

While Google cares about recency, it would also know if you were lying about it. The documents show that Google keeps copies of multiple versions of all indexed pages. Essentially, Google could recall all changes made to a page over time. That said, it will only use the last 20 changes of a URL when analyzing.

What this means: The fact that Google is taking your pages’ change history into consideration means you may want to be cautious when considering a series of changes to a given page. Making tiny changes to try to trick Google into thinking your content has been more dramatically updated could actually hinder your performance.

For example, if you were to update a blog post’s publish date when the content is basically the same, that could hurt rather than help your rank. In short, try to keep your content truly fresh and up to date. Quality and accuracy matter more than a date on the page.
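To get a feel for how small an update really is, you could keep your own version history and measure how much of the text changed. This is a minimal sketch using Python's standard library. The `PageHistory` class is hypothetical, the 20-version cap simply mirrors the figure reported from the leak, and the word-level comparison is our choice, not Google's method.

```python
from collections import deque
from difflib import SequenceMatcher

MAX_VERSIONS = 20  # mirrors the "last 20 changes" figure reported from the leak


class PageHistory:
    """Hypothetical store that keeps only the most recent versions of a page."""

    def __init__(self):
        # A bounded deque silently drops the oldest version once it is full.
        self.versions = deque(maxlen=MAX_VERSIONS)

    def record(self, text):
        self.versions.append(text)

    def last_change_ratio(self):
        """Share of words that changed between the two latest versions (0.0 to 1.0)."""
        if len(self.versions) < 2:
            return 0.0
        previous = self.versions[-2].split()
        current = self.versions[-1].split()
        return 1.0 - SequenceMatcher(None, previous, current).ratio()


history = PageHistory()
history.record("Rates are 7% in 2023")
history.record("Rates are 7% in 2024")
change = history.last_change_ratio()  # only one word in five changed
```

A very low ratio paired with a new publish date is the kind of cosmetic update this section warns against.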

5. Originality

“Pages with little content,” as it’s put in the documents, get an OriginalContentScore. This score indicates that the less content you have on a page, the more original and unique it should be.

While there’s no metric that measures character count, it’s clear that Google is looking for any short-form content to have an added dose of authenticity.

“Thin content is not always a function of length,” writes Mike.

What this means: If your content tends to be shorter, then you’ll want to ensure it’s unique and original. Try not to rely heavily on generative AI for your content. Also, see what types of content your competitors are putting out to pinpoint ways you can make your business stand out content-wise.
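One rough way to spot short pages that read too much like someone else's is to compare overlapping word sequences. The sketch below uses Jaccard overlap on three-word "shingles". It is a do-it-yourself proxy with hypothetical function names, and it is not how OriginalContentScore is calculated (the leak doesn't say).

```python
def shingles(text, size=3):
    """Return the set of consecutive word groups of the given size."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def overlap(page_a, page_b, size=3):
    """Jaccard overlap of two texts: 0.0 means nothing shared, 1.0 means identical."""
    a, b = shingles(page_a, size), shingles(page_b, size)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


yours = "we fix leaky pipes fast"
theirs = "we fix leaky roofs fast"
score = overlap(yours, theirs)
```

The higher the score between your page and a competitor's, the more reason to add details only your business can offer.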

6. Site size and age

The Google “Sandbox” is an SEO industry term used to describe the phenomenon of newer, smaller sites needing to go through a kind of waiting period before their pages rank. Google has previously denied there being any sort of Sandbox, but there are factors mentioned in the documents that do look at site size, like smallPersonalSite, and age, like hostAge. However, it’s unclear whether this could boost or demote a site.

What this means: A common small business challenge is being able to rank on Google quickly. It can be a long, slow road, so be patient and don’t take shortcuts. Focus on building your brand over time. In the meantime, look for other ways to reach your target audience, such as building an email list, promoting your business on social media, running display ads, and more.

“For small and medium businesses and newer creators/publishers, SEO is likely to show poor returns until you’ve established credibility, navigational demand, and a strong reputation among a sizable audience,” writes Rand.

7. Chrome data

It’s no secret that Google is able to measure data from its own browser, Chrome. That data could be impacting your SEO rankings, however: the Google API documents show that the number of views from Chrome is being tracked for both individual pages and entire domains.

“My read is that Google likely uses the number of clicks on pages in Chrome browsers and uses that to determine the most popular/important URLs on a site, which go into the calculation of which to include in the sitelinks feature,” writes Rand.

What this means: The more popular your pages are on Chrome, the better. Chrome owns 66% of the global internet browser market share, and it’s by far the most popular browser in the U.S.

Having a site that your users love—even if they find it through other means than search—is likely good for your SEO in general, because Google knows if your site is getting traffic and engagement.

While it may feel tempting to give up on SEO amidst all this recent Google news, a strong online presence is as important as it ever was. If you can optimize your pages to be what users from Chrome and other browsers really want to click, it can also benefit your overall site rank.

Staying ahead of the latest Google algorithm ranking factors

The moral of this latest Google news story? The leaked Google API documents remind us to stay focused on what we always knew to be true: building site content that makes our audiences happy is what will ultimately bring us success. Plus, being mindful that Google doesn’t always tell the truth about what it measures and what works is key.

If you still feel like your search marketing and advertising is struggling to maintain growth during these times of constant change from Google, you’re not alone. See how our solutions can help you maximize your SEO and search ad campaigns for sustainable growth no matter what Google throws your way.

Big thanks to Mike King of iPullRank, Rand Fishkin of SparkToro, and countless other sources for their in-depth coverage and analysis!
