When website traffic drops and it’s clear that you weren’t the cause, it’s time to point your finger at Google, rant, and maybe even cut that 6,000-word rant down into an informative 3,700-word blog post. This SEO is spending Christmas Eve with Google, so hopefully you won’t have to.

This is the type of early Christmas card you would not want from Google.

“It’s probably that darn Google Panda,”

“I feel like it’s Penguin and negative SEO!”

or

“It might be those directory submissions/paid links that so-and-so did in the past.”

I understand that there are plenty of ways to blame Google (or someone else) without proof, but before you go on a zealous “Google is evil” rampage, remember that empty accusations will not get your traffic back. Attributing traffic loss to a Google algorithm update is no easy task. In this post, I’ll outline the tools, resources, methodologies, and know-how you can use to evaluate the Google algorithm updates and refreshes that could be responsible for your sudden loss of traffic.

Warning: To make this post digestible, I’ve excluded things that I consider “features” of Google Search. When Google updates these features, you could either gain or lose traffic as well.

Table of Contents:

Step 1: Gathering News on Google SERP Volatility

- Tools:
  - MozCast
  - SERPmetrics
  - SERPS.com
- News Sources:
  - Authoritative search engine marketing news websites
  - Webmaster World discussions
  - From Google

Step 2: Picking the Right Dates to Analyze

- The art of framing the question and measuring the right stuff

Step 3: Identifying Your Most Impacted URLs

- How to export, list, and rank landing pages with significant traffic loss

Step 4: Match Your Landing Page to That Google Algorithm

- Algorithm Updates:
  - Google Panda
  - Penguin
  - Exact Match Domain Update
  - Pirate Update
  - “Top-Heavy” Update
  - 7-Result SERP Update
  - Vince Change
  - Brandy Update
  - Unknown Updates/Changes
- Google Features
  - Expanded Sitelinks
  - Universal Search
  - Personalized Search
  - Google Instant
  - Freshness Update (AKA Caffeine)
  - Real-time Search
  - Knowledge Graph
  - Google Authorship
  - Social Signals
  - Local
    - Venice Update
    - Google Places
    - Negative Reviews
  - Google Mobile Search

Step 5: Don’t Just Blame Google, Do Something!

- Prepare your content for the next Google Search trends.

Step 6: Diversification is the Best Long Term Solution

- Non-Google traffic alternatives

TL;DR: If you have the SEO ninja powers to understand or foresee which Google update hit you, you can remedy the damage or set long-term plans so that you won’t be affected by Google’s periodic whims!

’Tis The Season to Chase Google Algorithm Updates

While the holiday season is notorious for seasonal SEO traffic changes for B2B and B2C keywords alike, I was seeing unusual traffic changes that started on December 5th and continued on the 10th and 13th. This was odd, since it wasn’t yet the traditional week before Christmas, when vacation days are common.

12/18/2012 Update: A Google spokesperson says there was no Google algorithm “update” on the 13th. The rest of us are certain that there was some kind of change or series of changes; this wouldn’t be the first time we’ve had trouble interpreting Googlespeak. For example, what SEOs named the “Vince Update,” Googlers would simply consider a small change to the overall algorithm. Personally, I think Google is either making a series of changes (they make over 500 a year) or running tests (on any given search query from an average user, there may be 10 elements being tested).

One may be a fluke,

Two is a trend,

Three means we better figure out what’s going on.

–Wise Words of Larry Kim

Now without further ado, let’s dig into the analysis. You will need:

– A website whose organic traffic just recently declined

– A browser capable of private/incognito/InPrivate/anonymous search. The latest editions of Firefox, Chrome, and Internet Explorer all allow you to browse the web anonymously.

– A Google Analytics account correctly linked to said website

Optional: A backlink tool such as Google Webmaster Tools, Majestic SEO’s Site Explorer or SEOMoz’s Open Site Explorer.

Step 1: Gathering News on Google SERP Volatility

If there truly is a Google algorithm update or change, chances are you’re not the only one with altered search engine results pages (SERPs). Here are the places I checked to see if I’m just being the boy who cried wolf:

MozCast

SEOMoz’s handy-dandy MozCast tool tracks the SERPs for over 1,000 hand-picked, non-local keywords and displays ranking volatility as cute-looking weather forecasts. The more the search results change, the hotter the forecast gets. Search enthusiasts, I encourage you to understand the source of this data and to familiarize yourself with the other metrics it offers, such as SERP count and EMD and PMD influence.

December 10th just so happens to be one of those stormy days – check.

SERPmetrics

SERPmetrics uses a different scoring system to calculate what they call “flux,” the average of all SERP movement they track over their last period, weighted toward the top positions. The methodology differs slightly from MozCast’s, but the concept is the same, AND we’re seeing the same trends.

After December 10th, SERP flux goes upward. Double-checked.

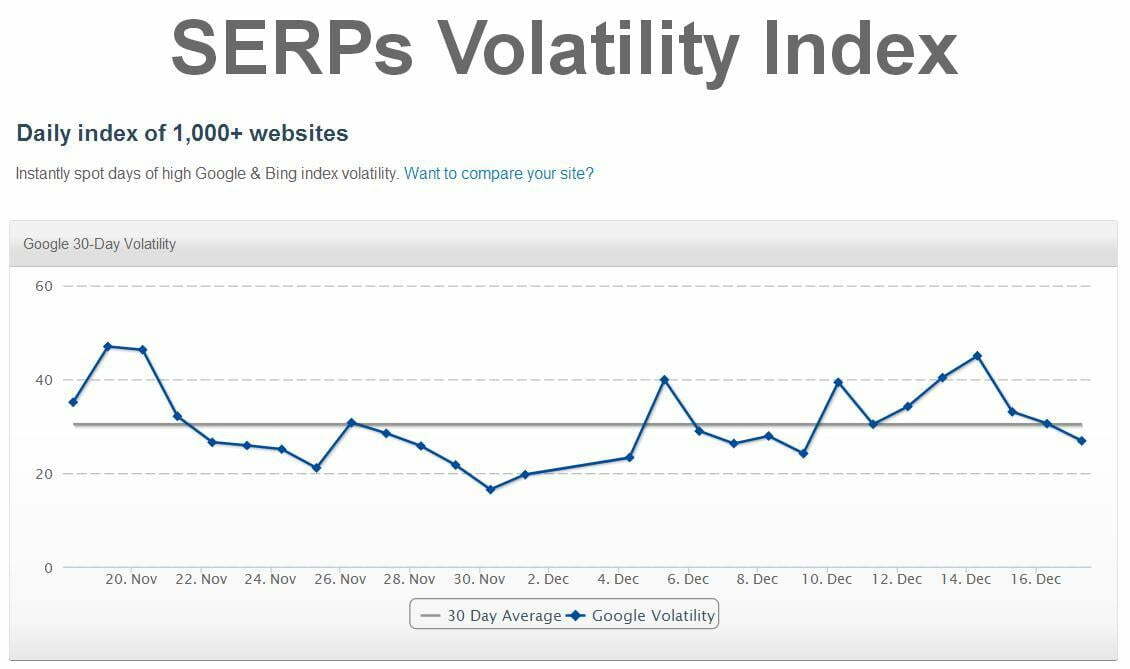

SERPs Volatility Index

SERPS.com creates their own SERPs Volatility Index with their own sample of 1000+ websites, as opposed to hand-picked keywords from select industries. What’s nice is that there’s even an average volatility to help you gauge whether a trend appears significant. Special thanks to Scott Krager for spending some time explaining how the index works.

After December 10th, SERP volatility trends upward, above the average. Ding ding ding!

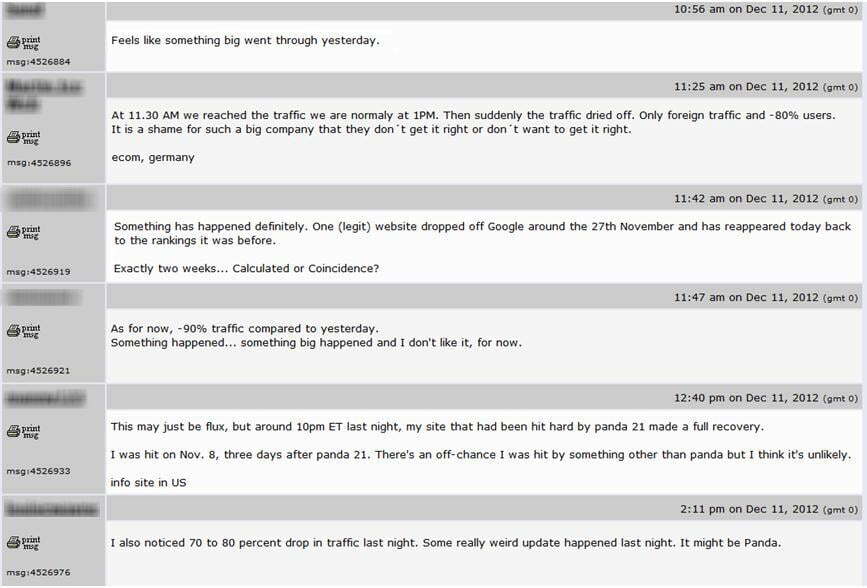
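If you log daily readings from one of these trackers yourself, a quick way to ask “is today unusually stormy?” is to compare each day against the mean plus some multiple of the standard deviation. This is a minimal sketch, assuming you’ve copied the scores into a list of (day, score) pairs; the numbers below are made up, and the one-standard-deviation cutoff is an arbitrary assumption, not how MozCast, SERPmetrics, or SERPS.com compute their own averages.

```python
from statistics import mean, stdev


def flag_volatile_days(readings, k=1.0):
    """Return days whose score exceeds mean + k * stdev, plus the threshold used.

    readings: list of (day_label, score) pairs copied from a volatility tracker.
    k: how many standard deviations above the mean counts as "stormy" (an assumption).
    """
    scores = [score for _, score in readings]
    if len(scores) < 2:
        raise ValueError("need at least two readings to estimate the spread")
    threshold = mean(scores) + k * stdev(scores)
    stormy = [day for day, score in readings if score > threshold]
    return stormy, threshold


readings = [  # Made-up scores, for illustration only.
    ("day 1", 61.0), ("day 2", 63.5), ("day 3", 70.2), ("day 4", 62.1),
    ("day 5", 60.8), ("day 6", 81.4), ("day 7", 74.9), ("day 8", 64.0),
]
stormy, threshold = flag_volatile_days(readings)
print(f"threshold = {threshold:.1f}; stormy days: {stormy}")
```

A spike on one tracker is a hint; a spike on all three on the same dates is what makes it worth chasing.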

Webmaster World Forums

Leave it to the webmasters of the world to tell you if they’re also freaking out. The Google SEO News and Discussion section in Webmaster World Forums is a goldmine for news and insights. If you would like to comment and add to the discussion, please remember to stick to the facts and not your emotions.

70-90% drop in traffic? WordStream must be incredibly lucky! I’m not the only webmaster freaking out? Looks like this will make a nice story.

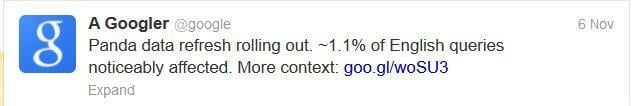

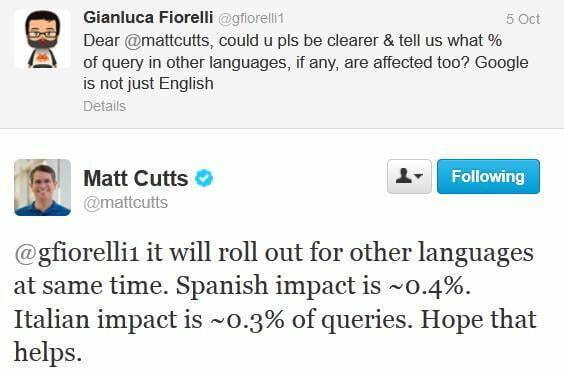

Search Marketing News Sources: SEL, SEW, SEJ, SERoundtable, and Google

When it comes to confirming a Google algorithm update, these news sites are usually the first to comment on the impact. However, note that their specialty is news reporting, not speculation, so it’s nice to go to the source: Google. It’s a smart idea to follow A Googler and Matt Cutts on Twitter and to subscribe to Google’s search blog.

@Google is Google’s official Twitter account.

The distinguished Google engineer Matt Cutts is well known for his webmaster videos on YouTube and for answering website owners’ questions.

Google has become increasingly transparent about the changes it makes to its search algorithm. The only problem is that many of these changes have cryptic codenames like Porky Pig, smoothieking, and old possum.

Important Disclaimer: Just because it’s not in the news doesn’t mean that one of Google’s many search algorithm improvements didn’t impact your web traffic. Even if your overall organic traffic stays constant, it’s very likely that big SERP movements will hurt some of your content while helping other content. Step 1 is purely exploratory, to help you test the waters.

Step 2: Picking the Right Dates to Analyze

Comparing the traffic change date ranges correctly to a good benchmark is practically half of this exercise. With experience, you’ll get a sense of how you’ll want to analyze your data.

1. Because our organic traffic follows a weekly cycle, I could compare the current traffic drop to the same date the prior week, or two weeks prior (use your judgment here).

2. I always want the highest precision possible to minimize data sampling error.

Pro Tip: Always be wary of year-over-year effects (e.g. comparing a non-holiday range to a holiday range) and one-time special events (e.g. a presidential election) that can skew your results. Use your judgment on what makes sense; a bad range will show misleading results.

For my own analysis of what happened on December 10th, I used traffic data from two weeks prior to the suspected algorithm changes. I had reason to believe that something fishy occurred on the 5th, and I did not want to analyze traffic changes that could have been caused by multiple updates. There is no perfect date range to analyze, but there certainly are darn good ones with sound reasoning behind them, and this is one of them.
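To sanity-check a benchmark window the same way, you can shift the affected range back by whole weeks (so Mondays line up with Mondays) and make sure no other suspected update date falls inside it. This is a minimal sketch: the December 10 to 12 affected window is a hypothetical choice, and the suspected dates simply reuse the 5th, 10th, and 13th from this post.

```python
from datetime import date, timedelta


def benchmark_range(start: date, end: date, weeks_back: int = 2) -> tuple[date, date]:
    """Shift [start, end] back by whole weeks so each weekday lines up with itself."""
    shift = timedelta(weeks=weeks_back)
    return start - shift, end - shift


def contaminating_dates(window: tuple[date, date], suspected: list[date]) -> list[date]:
    """Return any suspected update dates that fall inside the benchmark window."""
    lo, hi = window
    return [d for d in suspected if lo <= d <= hi]


# Hypothetical affected window: Monday Dec 10 to Wednesday Dec 12, 2012.
affected = (date(2012, 12, 10), date(2012, 12, 12))
suspected_updates = [date(2012, 12, 5), date(2012, 12, 10), date(2012, 12, 13)]

for weeks in (1, 2):
    window = benchmark_range(*affected, weeks_back=weeks)
    bad = contaminating_dates(window, suspected_updates)
    status = "contaminated by " + ", ".join(str(d) for d in bad) if bad else "clean"
    print(f"{weeks} week(s) back: {window[0]} to {window[1]} -> {status}")
```

With these inputs, the one-week-back window (December 3 to 5) contains the suspected December 5 change, while the two-week-back window (November 26 to 28) comes out clean, which is the same reasoning behind picking the two-weeks-prior benchmark.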

Step 3: Find the Offending URLs by Traffic

Now that SEOMoz, Raventools, and even Ahrefs have been getting slapped by Google’s API terms enforcement team, many of us have lost faith in rank-checking tools. Time to go back to checking good ol’ traffic, folks, via Google Analytics!

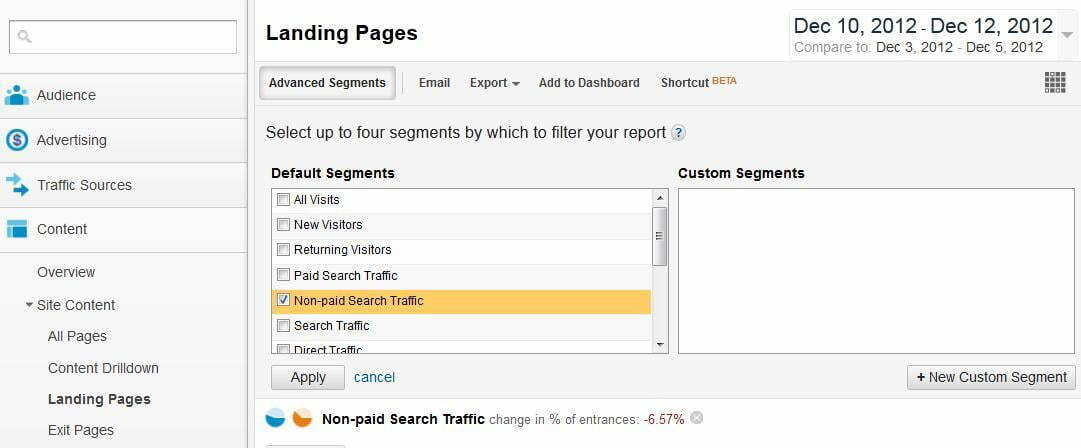

1. Log in to Google Analytics.

2. Dig into Standard Reporting under Content > Site Content > Landing Pages, and adjust the date range and the number of rows shown (I like to maximize it to 500). Also make sure the Advanced Segment “Non-paid Search Traffic” is applied.

3. Export the data into an editing-friendly format. Excel users, I recommend .csv or .xlsx. Hipsters, you’re free to use Google Spreadsheets.

4. For each landing page, calculate the traffic lost between the benchmark period and the period in which you suspect an algorithm update.

4a. Curse at how unfriendly the data format is to do this.

5. Rank the landing pages by the total traffic lost!

5a. Curse more if you’re not very good with Excel’s Sort & Filter function.

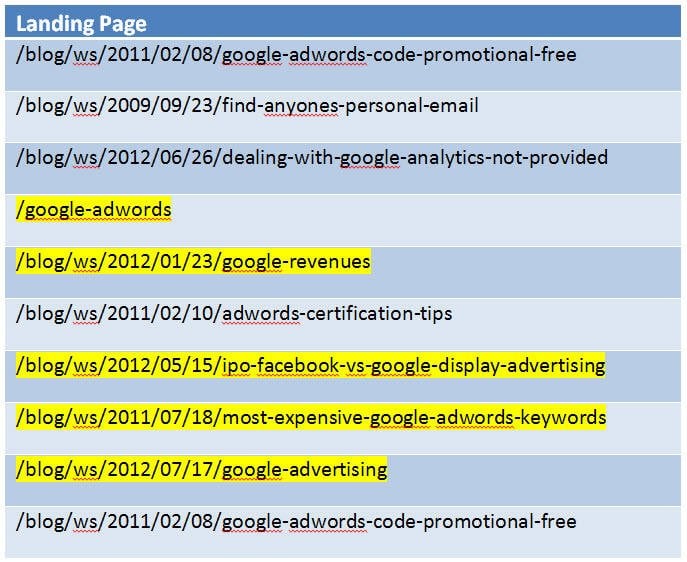

Here are the top 10 landing pages that were impacted by December 10th:

6. Group similar pages together and form a hypothesis. Seeing that five of our ten biggest traffic-losing landing pages were related to our infographics (content that accrued a large number of anchor-text-optimized links in a short amount of time), I first suspected a Google update targeting links, anchor text, and/or semantically related keywords.
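If you’d rather skip the Excel cursing in steps 4 and 5, here’s a minimal Python sketch that does the subtraction, ranking, and grouping for you. It assumes you exported the Landing Pages report once for the benchmark period and once for the affected period as CSV files with columns named `Landing Page` and `Visits`; those column names are an assumption, so check your export’s header row (and remove any summary lines above it) first. The URLs in the example are made up, and the grouping rule passed to the hypothetical `group_loss` helper is something you’d write for your own site.

```python
import csv
import io
from collections import defaultdict


def load_visits(fileobj, page_col="Landing Page", visits_col="Visits"):
    """Read a landing-page CSV export into {url: visits}.

    The column names are assumptions; adjust them to match your export.
    """
    visits = {}
    for row in csv.DictReader(fileobj):
        page = row[page_col].strip()
        # Exports often include thousands separators, e.g. "1,234".
        count = int(row[visits_col].replace(",", ""))
        visits[page] = visits.get(page, 0) + count
    return visits


def rank_traffic_loss(benchmark, affected, top_n=10):
    """Return (url, before, after, lost) tuples, biggest loss first."""
    rows = []
    for page in set(benchmark) | set(affected):
        before = benchmark.get(page, 0)
        after = affected.get(page, 0)
        rows.append((page, before, after, before - after))
    # Sort by visits lost (descending), then by URL so ties come out in a stable order.
    rows.sort(key=lambda r: (-r[3], r[0]))
    return rows[:top_n]


def group_loss(ranked, classify):
    """Sum visits lost per group; classify(url) -> group name is your own rule."""
    totals = defaultdict(int)
    for page, _, _, lost in ranked:
        totals[classify(page)] += lost
    return dict(totals)


# Tiny in-memory example; in practice, pass open("benchmark.csv", newline="") etc.
benchmark_csv = io.StringIO('Landing Page,Visits\n/infographic-a,900\n/google-adwords,"1,200"\n/blog/post,300\n')
affected_csv = io.StringIO("Landing Page,Visits\n/infographic-a,400\n/google-adwords,1000\n/blog/post,320\n")

ranked = rank_traffic_loss(load_visits(benchmark_csv), load_visits(affected_csv))
for page, before, after, lost in ranked:
    print(f"{page}: {before} -> {after} (lost {lost})")

# Hypothetical grouping rule: does the URL look like an infographic?
print(group_loss(ranked, lambda url: "infographic" if "infographic" in url else "other"))
```

Pages that exist in only one period are treated as having zero visits in the other, so brand-new pages show up as negative losses rather than disappearing.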

Step 4: Gather Up the Clues, Crack the Case.

This is where SEOs pat themselves on the back and remember why we love our jobs. Tracing website traffic changes back to Google algorithm updates is like playing detective, searching for clues that point to the culprit. Usually it makes sense to start with your hypothesis (you want to hone your SEO senses), but let’s start with the most popular suspects.

These Google updates are the most likely culprits for the traffic loss. (If you need more technical SEO interview questions, any of these algorithms makes a fair question to ask an SEO!)

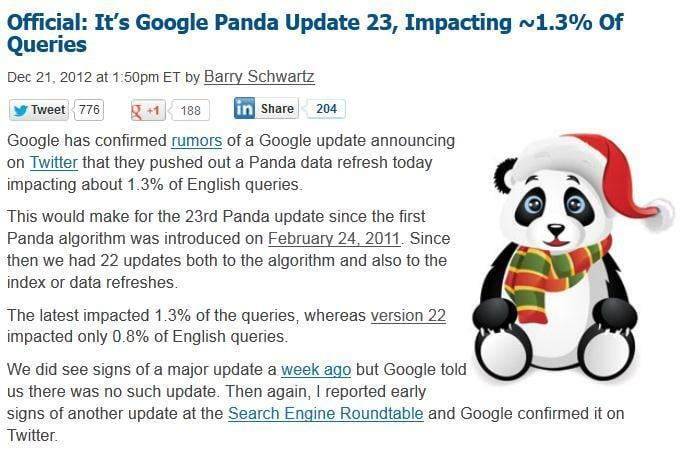

Google Panda Update

Google Panda defined: Google’s Panda algorithm cracks down on thin or shallow content, sites with high ad-to-content ratios, and other signals of low-quality web content. To date, Panda has been updated over 20 times and has cumulatively impacted over 20% of all English queries.

Why so many updates? Googler Navneet Panda didn’t just build an algorithm; he built a machine learning system that improves over time. The scariest thing, of course, is that Panda is a site-wide penalty.

One of the few Google algorithms you can put a face to.

Algorithm assessment: Since Panda works site-wide, the easiest way to check for a Panda update is to see whether all of your web pages are seeing significant traffic declines. The next step is to assess just how useful your website content is. The affected WordStream landing pages are all long, in-depth articles with original perspectives. We were not hit by Panda.

Pro Tip: Article submission websites, which were once touted as an SEO best practice, were hit hard by the Google Panda update. These websites were rarely visited by real people and were essentially just web content and link middlemen intended to manipulate Google search engine rankings. Keep your web content unique, useful, and thoughtful, and you’ll stay well clear of the Panda’s wrath.
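Here’s a rough sketch of that “is everything dropping?” check, using per-page visit totals for the benchmark and affected periods, like the ones exported in Step 3. The 50-visit floor (to ignore pages too small to judge) and the 20% drop threshold are arbitrary assumptions you should tune, and a high share of declining pages is only a hint of a site-wide problem, not proof of Panda.

```python
def share_of_pages_declining(benchmark, affected, min_visits=50, drop_threshold=0.2):
    """Fraction of reasonably sized landing pages that lost at least drop_threshold of their visits.

    benchmark, affected: {url: visits} for the two periods being compared.
    min_visits, drop_threshold: arbitrary cutoffs (assumptions), not Google values.
    """
    eligible = [page for page, visits in benchmark.items() if visits >= min_visits]
    if not eligible:
        return 0.0
    declining = [
        page for page in eligible
        if affected.get(page, 0) <= benchmark[page] * (1 - drop_threshold)
    ]
    return len(declining) / len(eligible)


# Made-up numbers, for illustration only.
before = {"/a": 100, "/b": 100, "/c": 100, "/tiny": 10}
after = {"/a": 50, "/b": 90, "/c": 70, "/tiny": 2}
print(f"{share_of_pages_declining(before, after):.0%} of eligible pages declined")
```

If that share is close to 100%, a site-wide cause like Panda moves up your suspect list; if only a handful of pages dropped, page-level causes deserve a look first.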

Google Penguin Update

Google Penguin defined: The Google Penguin algorithm (AKA the Webspam Update) is notorious for cracking down heavily on websites that use linking practices intended to manipulate search engine rankings, but it also targets other SEO techniques that violate Google’s Webmaster Guidelines. These include keyword stuffing, cloaking, sneaky redirects/doorway pages, purposeful duplicate content, and others. Like Panda, Penguin rolls out in iterations, and I believe the penalty is site-wide as well, although Google has not confirmed this.

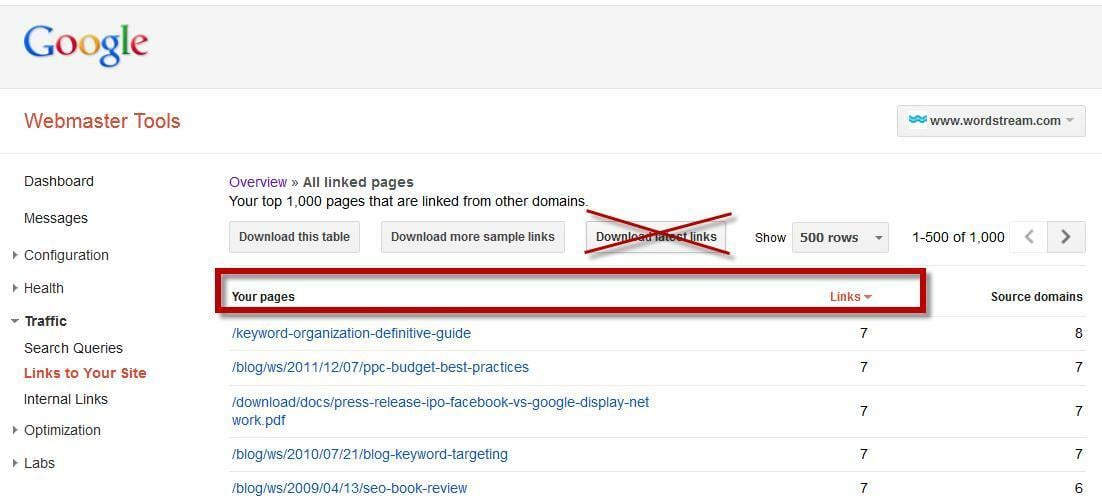

Algorithm assessment: First, I checked whether these landing pages were somehow being linked to by websites from bad neighborhoods (e.g. porn/gambling sites). To do this, I logged into Google Webmaster Tools/Open Site Explorer/Majestic SEO and delved into each page’s link profile. Actually visit the sites linking to the page if you don’t know them, and then look for patterns. It’s not always the newest links that bring down your traffic; old links are sometimes lost, broken, or hacked into doing something else.

Seeing that all 10 landing pages were getting links from a variety of trustworthy domains (with a few ugly scraper sites mixed into each) and generally had healthy links (e.g. links from the New York Times), I concluded that we were not being hurt by spammy links.
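When a page has hundreds of links, tallying them by linking domain makes the “look for patterns” step much faster. This sketch assumes you’ve exported the linking URLs for one landing page from your backlink tool into a plain list; stripping a leading “www.” is a simplification, and the example URLs are made up.

```python
from collections import Counter
from urllib.parse import urlsplit


def linking_domains(source_urls):
    """Count links per linking host, most frequent first."""
    counts = Counter()
    for url in source_urls:
        # urlsplit(...).hostname is lowercased, or None if the string has no host.
        host = urlsplit(url.strip()).hostname or ""
        if host.startswith("www."):
            host = host[len("www."):]
        if host:
            counts[host] += 1
    return counts.most_common()


links = [  # Made-up example export.
    "http://www.nytimes.com/2012/some-article",
    "https://nytimes.com/another-article",
    "http://scraper.example/copied-post",
    "http://blog.example.org/roundup",
]
for domain, n in linking_domains(links):
    print(f"{n:>3}  {domain}")
```

Domains that show up far more often than you’d expect, or that you’ve never heard of, are the ones worth visiting by hand.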

Pro Tip: Link tracking tools are necessary to find out if your website has been participating in spammy link practices in the past, or has been the target of a negative SEO attack. The more advanced user will also look at the ratio of varying anchor texts that lead to your landing pages. Visit Google Webmaster Tools, Open Site Explorer, and Majestic SEO to learn more about them.

If you’ve knowingly attempted to manipulate search engine rankings through other means that are in Google’s Webmaster Guidelines’ grey or red zones, this tutorial is simply too basic for you 😉

Exact Match Domain Update

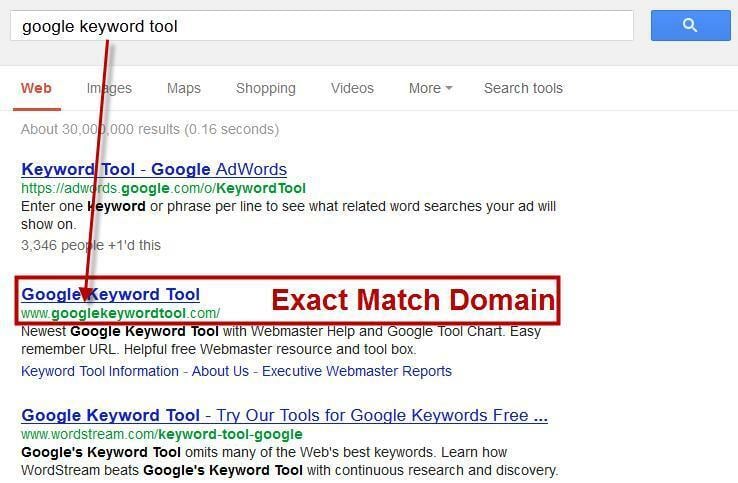

Exact Match Domain defined: The Exact Match Domain (EMD) algorithm update was created to prevent SERP domination by domains that exactly match the keywords they target. Typically, domains struck by this update have thin content that’s considered low-quality. Like Penguin, the EMD update also rolls out periodically.

Algorithm assessment: I started by searching Google in a browser’s private browsing mode. If you search for the main keyword variations driving traffic to your landing pages and see competitors with exact match domains beating you in the SERPs, either they’ve improved their pages or Google has made an exact match domain algorithm change. From my perspective, it looks like an exact match domain algorithm refresh, since their website content seems unchanged.

Unfortunately for us, googlekeywordtool.com is a no-nonsense navigational toolbox that people continue to use to access Google’s AdWords Keyword Tool, and we’ve been head-to-head with this URL for a while.

Pro tip: Be extra careful when typing in your query, as Google results are known to change when you retype mistakes (yes, Google is that darn smart when it comes to personalization). Also note that this method isn’t perfect, since results are still adjusted for your connection’s geography. If any of your links show up as purple (clicked in the past), you’re doing it wrong!
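If you’re checking more than a handful of SERPs, you can flag exact match domains among the result URLs you copied down with a rough check like this. It’s a heuristic sketch, not how Google defines an EMD: it only looks at the leftmost hostname label after dropping “www.”, ignores hyphens, and treats a partial match (PMD) as the keyword appearing anywhere inside that label.

```python
import re
from urllib.parse import urlsplit


def domain_label(url):
    """Leftmost hostname label after dropping 'www.' and hyphens, e.g. 'googlekeywordtool'."""
    host = urlsplit(url).hostname or ""
    if host.startswith("www."):
        host = host[len("www."):]
    return host.split(".")[0].replace("-", "")


def normalize(keyword):
    """Lowercase and drop everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", keyword.lower())


def match_type(url, keyword):
    """Return 'exact', 'partial', or None for a result URL versus a keyword."""
    label, kw = domain_label(url), normalize(keyword)
    if not kw:
        return None
    if label == kw:
        return "exact"
    if kw in label:
        return "partial"
    return None


results = [  # Result URLs, typed in by hand from a private-browsing search.
    "http://www.googlekeywordtool.com/",
    "http://www.wordstream.com/keywords",
]
for url in results:
    print(url, match_type(url, "google keyword tool"))
```

Subdomains (e.g. blog.example.com) will be judged on “blog”, so treat the output as a first pass before eyeballing the SERP yourself.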

Pirate Update (DMCA Warnings)

Google Pirate defined: When Google receives a Digital Millennium Copyright Act (DMCA) takedown notice from a copyright owner about infringing content that appears in its search results, Google responds in two ways. First, the infringing content is removed from search results at the copyright owner’s request if there’s a clear violation. Then, if a website accumulates an unhealthy number of DMCA takedown requests, the Pirate Update demotes the whole website in Google’s rankings.

Algorithm assessment: Unless you’re a black hat spammer passing off someone else’s content as your own, running a file-sharing site without copyright enforcement, or operating some other internet crime syndication platform, I wouldn’t worry about Pirate. For most of us, though, before you make the mistake of grabbing “free” images from the web, it’s a good idea to check whether they come with some sort of license.

Pro tip: If you can’t find the source, use a reverse image search tool to help you make informed decisions without getting in trouble with the law. If you plan on optimizing images for image search, it’s best to own unique images.

“Top Heavy” Update

Top Heavy defined: The Top Heavy update penalizes websites that show users too many ads as soon as they land on the site. There have been reports of websites regaining their rankings after removing excessive ads and being revisited by the Top Heavy algorithm. Like the EMD update, Top Heavy also refreshes periodically.

Here’s a layout of a top-heavy website.

Algorithm assessment: Unfortunately, WordStream doesn’t run affiliate advertising, so we can’t comment on this update from experience.

We welcome the expert bloggers among our readers to comment on their experiences with the Top Heavy update.

7-Result SERP Update

7 SERP defined: The 7-Result SERP update isn’t a penalty per se, but a new way for Google to present information to the searcher. Typically, the usual first page of 10 search results gets truncated to 6-9 results (7 is the most common), and the top result shows expanded sitelinks. If you need another reason why rank-checking for SEO should die, here you go. But rank-checking is a debate for another informative post!

Algorithm assessment: Of the affected landing pages, only our /google-adwords page has been impacted by 7-Result SERPs, and that change predates December 10th.

However, a quick check reveals our Google AdWords page has two new competitors – the New York Times and Search Engine Land… fishy!

Pro Tip: Generally speaking, 7-Result SERPs occur on navigational queries that have a result with expanded sitelinks. If you’re competing on branded terms, this is an update to keep an eye on.

Vince Change

Vince Change defined: Also known as the “Google favors brands” update, this 2009 change came from a Googler named Vince, who factored more trust (quality, PageRank, reputation, authority, and other metrics) into the algorithm for general queries. As a result, webmasters saw big brands gain a lot more ground than small brands in the SERPs. Note that Vince does not impact long-tail queries.

Case study analysis: The keyword “Google AdWords” is a prime example of how brands of different strengths fare from a Vince perspective. Thanks to its great online authority (PR9, quality news, and many trustworthy authors), the New York Times was able to penetrate the SERPs of an already heavily branded keyword and break through Google’s branded AdWords wall of defense. WordStream is definitely seeing something that suggests a Vince-like update, since most of our affected pages target “Google” or “AdWords” keywords.

Pro tip: Big brands can easily bully you out of generic keywords. Either build your brand and become one of them, or focus on the long tail. To become a big brand on the internet, you’ll need to build amazing backlinks.

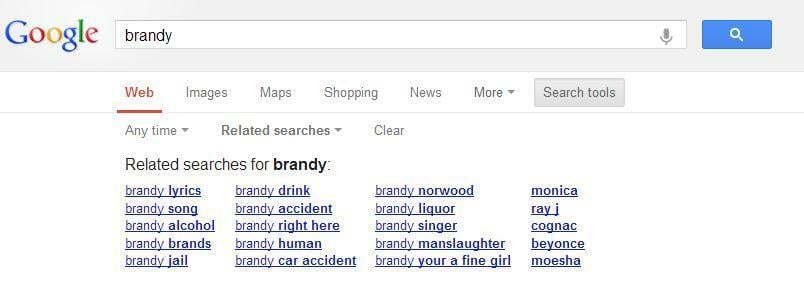

Brandy Update

Brandy Update defined: Going even further back, to 2004, this update gave additional weight to keyword synonyms and other semantically related keywords. This was also when anchor text variations and the “neighborhood” of pages with inbound links started being treated as important ranking factors.

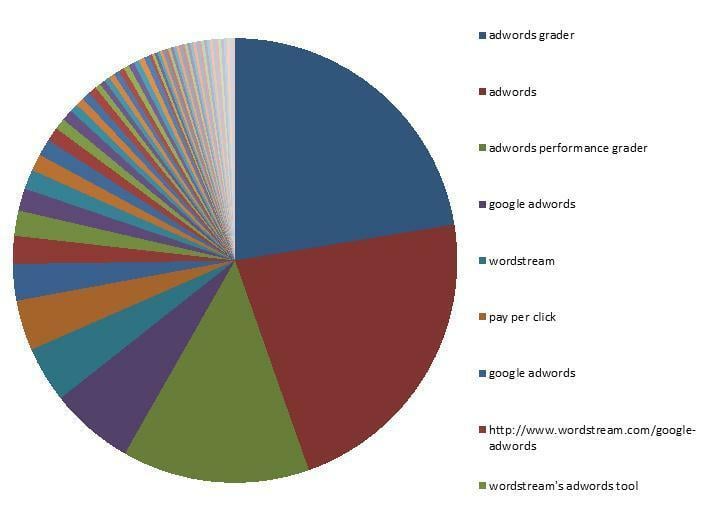

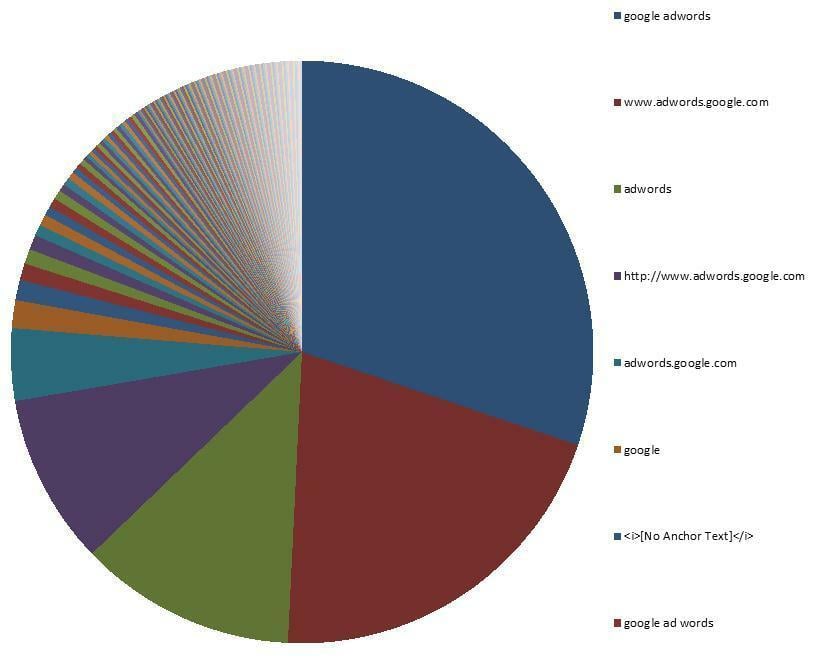

Case study analysis: If you’ve already checked for Penguin in terms of link neighborhoods and webspam such as keyword stuffing, Brandy is a reminder that semantics matter in your website content, your internal links, and even the anchor text of other websites linking to your content. Having used infographics as a way to garner links and co-citations from news sites, I knew that the embed codes of our infographics could be creating an unnatural-looking backlink profile. Nor can we rule out the possibility that links acquired through infographics are now given less weight. Anchor text distribution was a legitimate area of concern for us.

If the main keyword you’re targeting is “Google AdWords” but a lot of incoming links use the anchor text “adwords grader” instead, it makes sense that we were losing ground on “Google AdWords.”

A natural link profile will have branded hyperlinks, full URLs, missing anchor text, and sometimes even misspellings. A “normal” link profile will vary with the competition in a particular keyword space.

The charts above were created with Open Site Explorer data. If you’re looking for free tools, I’d recommend a simple Ahrefs account. All of its features should be fully functional until mid-January, which is when Google will force Ahrefs to comply with the newest API guidelines. Alternatively, backlinkwatch.com is an option: it’s slower than a paid tool and filled with annoying ads, but it’s hard to beat free.

Pro tip: If you’re budget-strapped, brain dead, or don’t have top-notch keyword research skills, you can always use Google’s Related searches as a crutch. Otherwise, use WordStream’s Keyword Tool to find semantically related keywords. Rather than stuffing these keywords into your article, hire a writer who can work them in naturally and elegantly.
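To put rough numbers on an anchor text mix like the one described above, you can bucket each anchor from a backlink export and compute the shares. This is a minimal sketch: the buckets (empty, URL, exact target phrase, branded, other), the brand terms, and the sample anchors are all assumptions for illustration, and there’s no universal “healthy” ratio to compare against, since a normal profile varies by keyword space.

```python
import re
from collections import Counter


def classify_anchor(anchor, brand_terms, target_phrase):
    """Put one anchor text into a rough bucket; buckets are checked in this order."""
    text = " ".join(anchor.lower().split())  # lowercase and collapse whitespace
    if not text:
        return "empty"
    if re.match(r"(https?://|www\.)", text):
        return "url"
    if text == target_phrase.lower():
        return "target"
    if any(term.lower() in text for term in brand_terms):
        return "branded"
    return "other"


def anchor_distribution(anchors, brand_terms, target_phrase):
    """Share of anchors in each bucket, largest bucket first."""
    counts = Counter(classify_anchor(a, brand_terms, target_phrase) for a in anchors)
    total = sum(counts.values())
    return {bucket: n / total for bucket, n in counts.most_common()}


anchors = [  # Made-up sample anchors.
    "Google AdWords", "google  adwords", "WordStream", "adwords grader",
    "http://www.wordstream.com/google-adwords", "",
]
for bucket, share in anchor_distribution(anchors, ["wordstream"], "Google AdWords").items():
    print(f"{bucket:>8}: {share:.0%}")
```

Comparing your page’s distribution with that of the pages outranking you on the same keyword is more telling than staring at your own numbers in isolation.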

Unknown Updates/Changes

I’m sorry, but Google is a black box, and we can’t know everything about it. There are plenty of algorithmic reasons why you may have lost website traffic that I haven’t described here, and only Google knows them all. Sometimes more emphasis on trust signals like AuthorRank is suspected; other times it’s a focus on keyword semantics or the incorporation of social signals. It’s impossible to pinpoint exactly what is going on by yourself, so make sure you analyze, test, and cross-check with webmasters around the world.

Step 5: Don’t Just Blame – Have an Action Plan

I hope you now have a better idea of some of the parts that go into Google’s algorithm, and of how to attribute the traffic loss your top pages are experiencing. However, don’t stop there: you weren’t hired to whine!

At WordStream, we’ve already found ways to diversify our incoming link anchor texts, create better lists of semantic keywords for our content writers, and shift our content focus away from infographics in preparation for Google’s current and future algorithm shifts.

If it all works out, we’ll have recovered and bested Google’s algorithm for the long run!

Step 6: The Long Term Online Traffic Strategy Is Always to Diversify

If Google pulls a quick one on your organic traffic, is your business dead? In the long run, Google is bound to make algorithmic updates that will cause your traffic to fall whether you like it or not.

To minimize the impact of such losses, it’s a great idea to invest in social media marketing for a steady stream of referral traffic, email marketing for direct traffic to your website, and (God forbid you forget) PPC for a steady stream of qualified leads.

Online marketing is a marathon, and I sincerely thank you for running with us.

Victor Pan is WordStream’s resident search samurai. When he’s not busy gathering and analyzing web data, he’s legitimately practicing the way of the sword, kendo.